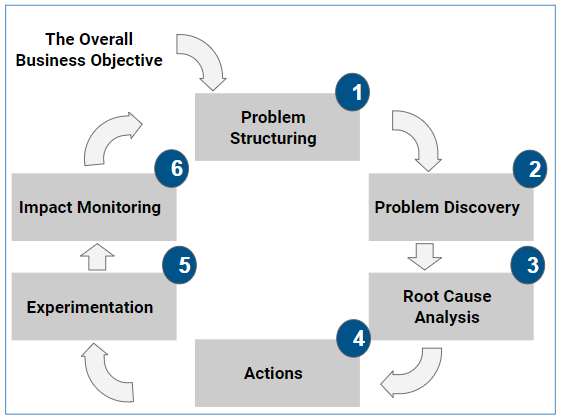

Faster and better decisions with AI-driven self-serve Insights

Incedo Lighthouse™ with Self-Serve AI is a cloud-based solution that is creating significant business impact in Commercial Effectiveness for clients in the pharmaceutical industry. Self-serve means empowering business users with actionable intelligence to serve their business needs by leveraging the low-code AI paradigm. This reduces dependency on data scientists and engineers and lets business users iterate faster on actionable decisions and monitor their outcomes.

As internal and external enterprise data continues to grow in size, frequency, and variety, classic challenges intensify. These include sharing information across business units, the lack of a single source of truth, accountability, and data quality issues (missing data, stale data, etc.).

For IT teams that own diverse data sources, provisioning enterprise-scale data in the requisite format, quality, and frequency becomes an added workload. It also impedes meeting the ever-growing analytics needs of various BU teams, each of which treats its own request as a priority. Think of the many dashboards floating around an organization, created at the behest of different BU teams. Even if they are kept up to date with great effort, it is still hard to extract insights that lead to direct action on critical issues, and harder still to measure the impact of that action on the ground. Different teams have different interaction patterns, workflows, and unique output requirements, which makes it very hard for IT to provide canned solutions in a dynamic business environment.

Self-service intelligence is therefore imperative for organizations that want business users to make critical decisions faster every day by leveraging the true power of data.

Enablers of a self-service AI platform – Incedo Lighthouse™

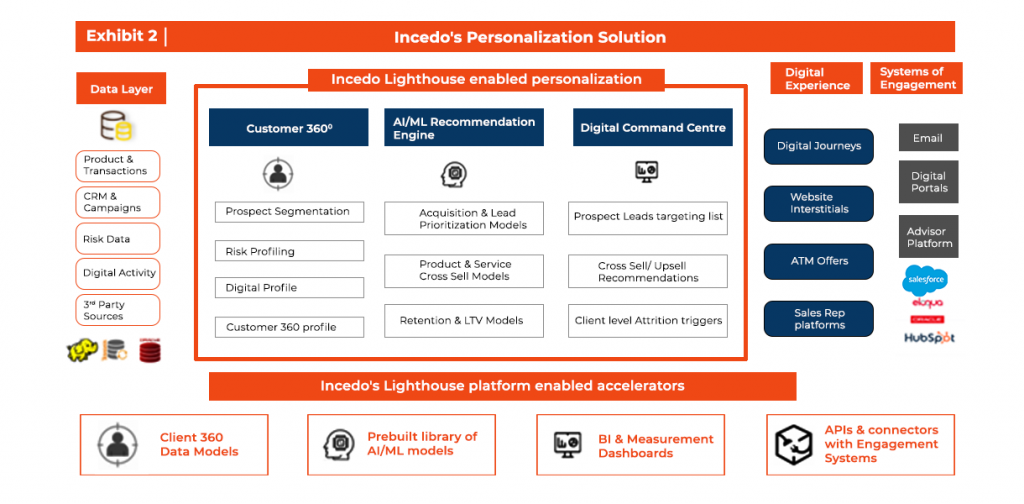

Our AWS cloud-native platform, Incedo Lighthouse™, is a next-generation, AI-powered Decision Automation platform that arms business executives and decision-makers with actionable insights and helps them assimilate those insights into daily workflows. It is built as a cloud-native solution on several AWS services and tools that make executive decision-making highly efficient at scale. Key features of the platform include:

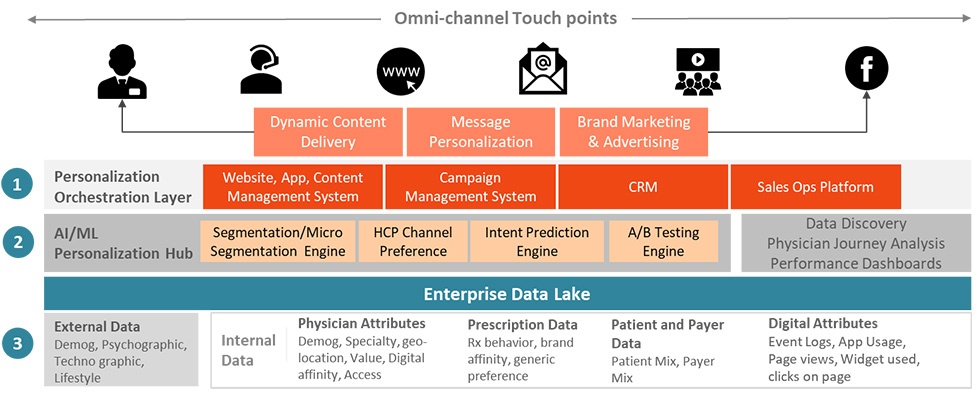

- Customized workflow for each user role: Incedo Lighthouse™ caters to the different needs of enterprise users and addresses each role's specific needs:

- Business Analysts: Define KPIs as business logic on top of the raw data, and capture the inherent relationships among KPIs as a tree structure to identify interconnected issues at a granular level.

- Data Scientists: Develop, train, test, deploy, monitor, and retrain ML models specific to the enterprise's use cases, with end-to-end model management on the platform

- Data Engineers: Identify data quality issues and define remediation, feature extraction, and serving (using online analytical processing) as a connected process on the platform

- Business Executives: Consume the actionable insights (anomalies, root causes) auto-generated by the platform, define action recommendations, test the actions via controlled experiments, and push confirmed actions into implementation

- Autonomous data and model pipelines: A common pain point for business users is the slow journey from data to insights to action recommendations, which can take weeks even for simple questions asked by a CXO. To address this, Incedo Lighthouse™ makes the process autonomous, from generating insights out of raw big data to recommending actions via controlled experimentation, using combined data and model pipelines that business users can configure themselves.

- Integrable with external systems: Incedo Lighthouse™ can be easily integrated with multiple Systems of Record (e.g., various databases and cloud sources) and Systems of Execution (e.g., SFDC), based on the client's data source mapping.

- Functional UX: Incedo Lighthouse™ is designed to be intuitive and easy to use. Its workflows are structured so that users naturally click through to the right features to supply inputs (e.g., drafting a KPI tree, publishing trees, training models) and consume outputs (e.g., anomalies, customer cohorts, experimentation results). Visualization platforms such as Tableau and Power BI are natively integrated with Incedo Lighthouse™, making it a one-stop shop for insights and actions.
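To make the KPI tree idea from the Business Analysts' workflow concrete, here is a minimal Python sketch of how a tree of KPIs can be walked to localize a root cause. It is not the Incedo Lighthouse™ implementation: the `KpiNode` class, the `root_causes` function, the 10% deviation threshold, and the example KPIs are all hypothetical, chosen only to show how a deviation at the top of the tree can be traced down to the deepest driver that is also off target.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KpiNode:
    """A KPI in a hypothetical KPI tree; children are the KPIs that drive it."""
    name: str
    actual: float
    expected: float
    children: list[KpiNode] = field(default_factory=list)

    def deviation(self) -> float:
        """Relative deviation of actual from expected (0.0 when expected is 0)."""
        if self.expected == 0:
            return 0.0
        return (self.actual - self.expected) / self.expected


def root_causes(node: KpiNode, threshold: float = 0.1) -> list[str]:
    """Return the deepest anomalous KPIs under `node`.

    A KPI is anomalous when |deviation| > threshold. If an anomalous KPI has
    anomalous drivers, we descend into them; otherwise the KPI itself is reported.
    """
    if abs(node.deviation()) <= threshold:
        return []
    causes: list[str] = []
    for child in node.children:
        causes.extend(root_causes(child, threshold))
    return causes or [node.name]


# Hypothetical example: revenue is driven by conversion rate and deal value.
lead_quality = KpiNode("lead_quality", actual=0.5, expected=0.7)
follow_ups = KpiNode("bda_follow_ups", actual=95, expected=100)
conversion = KpiNode("conversion_rate", 0.15, 0.20, [lead_quality, follow_ups])
deal_value = KpiNode("avg_deal_value", 1.0, 1.0)
revenue = KpiNode("revenue", 80, 100, [conversion, deal_value])

print(root_causes(revenue))  # ['lead_quality']
```

Here the 20% revenue shortfall is traced through the off-target conversion rate down to lead quality, while the on-target deal value and the only slightly low follow-up count are ignored.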
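The Business Executives' workflow involves testing actions via controlled experiments before pushing confirmed actions into implementation. The source does not say which statistical test the platform uses. As an assumption, the sketch below applies a standard two-sided two-proportion z-test to conversion counts from a control group and a treatment group. The function names `two_proportion_z_test` and `should_roll_out`, the 0.05 significance level, and the sample counts are hypothetical.

```python
from __future__ import annotations

import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of a control (a) and a treatment (b) group.

    Returns (z, p_value), where p_value is two-sided under the normal approximation.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate under H0: p_a == p_b
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the z-test is undefined")
    z = (p_b - p_a) / se
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)) for the standard normal CDF Phi.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def should_roll_out(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05) -> bool:
    """Hypothetical decision rule: confirm the action only if treatment is significantly better."""
    z, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return z > 0 and p_value < alpha


# Hypothetical experiment: 100/1000 control leads vs 130/1000 leads that received the action.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z={z:.2f}, p={p:.3f}")
print(should_roll_out(100, 1000, 130, 1000))  # True
```

Pooling the two rates in the standard error is the conventional choice when the null hypothesis is that both rates are equal. With small samples or very rare conversions, an exact test would be a safer choice than this normal approximation.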

Incedo Lighthouse™ in Action: Pharmaceutical CRO Use Case

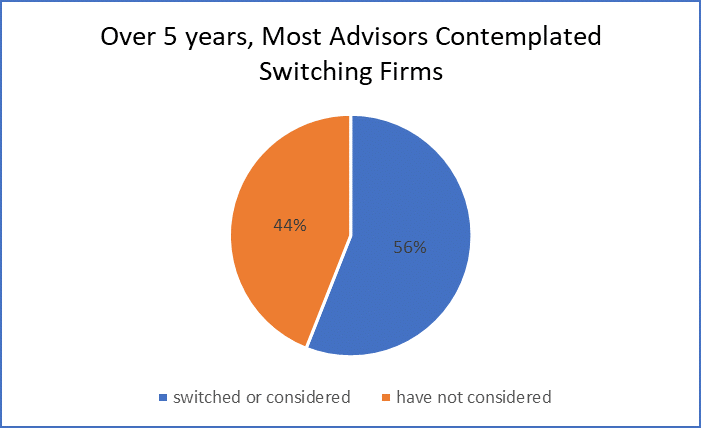

In a recent deployment of Incedo Lighthouse™, the key users were the Commercial and Business Development team of a leading pharma CRO whose customers were drug manufacturers. Their pain point was low conversion rates, which led to lost revenue and added inefficiency in the targeting process. A key reason was poor prioritization of leads in terms of conversion propensity and total lifetime value. This stemmed mainly from the manual, human-judgment-driven, ad hoc, static, rule-based way in which leads were identified for the Business Development Associates (BDAs) to work on.

Specific challenges that stood in the way of applying data science to lead generation and targeting were:

- The raw prospect data, which was the foundation for predictive lead generation modeling, was siloed within the client's tech infrastructure. Without a common platform to bring data and models together, efforts to develop high-accuracy predictive lead generation models failed.

- Even in the few exceptional cases where the data was stitched together by hand and predictive models were built, the team struggled to keep the models up to date without integrated data and model pipelines working in tandem.

To overcome these challenges, the Incedo Lighthouse™ platform was deployed. Its deployment in the AWS cloud environment not only brought real improvements in target conversions but also helped transform the BDAs' workflow. By harnessing data and AI, and by leveraging essential AWS-native services, we achieved efficient deployments and sustained service improvements.

- Combine information from all data sources into a 360-degree customer view, enabling the BDAs to see the bigger picture effortlessly. To do this effectively, Incedo Lighthouse™ leveraged AWS Glue, a cost-effective, user-friendly data integration service, to connect seamlessly to the various data sources, organize the data in a central catalog, and manage the pipeline tasks that load data into a data lake.

- Develop and deploy AI/ML predictive models for conversion propensity using the Data Science Workbench, part of the Incedo Lighthouse™ platform, after building data engineering pipelines that create a ‘single version of the truth’ every time the raw data is refreshed. Pre-built model accelerators were used to sort prospects in descending order of conversion propensity, helping BDAs maximize the return on the time they invest in pursuing them. The Data Science Workbench also operationalizes the ML models built in the process, connecting model outputs to KPI Trees and powering other custom visualizations. Using Amazon SageMaker Canvas, Incedo Lighthouse™ enables non-technical users to create machine learning models, offering access to pre-built models and self-service insights while streamlining the delivery of compelling results without extensive technical expertise.

- Deliver key insights in a targeted, attention-grabbing way so that BDAs can make the most of the information in a short span of time. Incedo Lighthouse™ leverages Amazon QuickSight, a key element in delivering targeted insights, which provides well-designed dashboards, KPI Trees, and intuitive drill-downs to help BDAs and other users make the most of the information quickly. Leads can be ranked by model-reported conversion propensity, time-based priority, and custom filters such as geography and area of expertise. BDAs can double-click on individual targets to understand deviations from actuals, review comments from previous BDAs, and decide on the next best actions. QuickSight seamlessly integrates with Next Gen Stats apps and offers cost-effective, scalable BI, interactive dashboards, and natural language queries for a comprehensive and efficient user experience. The result was a higher prospect conversion rate, driven by automated, AI-based, data-driven decisions delivered to BDAs in a highly action-oriented way.
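The lead-ranking behavior described in this deployment can be sketched in a few lines of Python. This is an illustrative assumption, not the platform's code. It takes conversion propensities as already produced by some model, applies optional geography and expertise filters, and sorts leads by propensity in descending order. Ties are broken by how long a lead has gone without contact, as a stand-in for time-based priority. The `Lead` fields, the `rank_leads` function, the tie-breaking rule, and the sample prospects are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Lead:
    prospect: str
    propensity: float        # model-reported conversion probability in [0, 1]
    days_since_contact: int  # simple stand-in for time-based priority
    geography: str
    expertise: str


def rank_leads(
    leads: Iterable[Lead],
    geography: Optional[str] = None,
    expertise: Optional[str] = None,
) -> list[Lead]:
    """Filter leads by optional geography/expertise, then rank them for BDAs."""
    selected = [
        lead
        for lead in leads
        if (geography is None or lead.geography == geography)
        and (expertise is None or lead.expertise == expertise)
    ]
    # Highest propensity first; ties go to the lead that has waited longest for contact.
    return sorted(selected, key=lambda lead: (-lead.propensity, -lead.days_since_contact))


leads = [
    Lead("prospect-a", 0.72, 10, "EU", "oncology"),
    Lead("prospect-b", 0.91, 3, "NA", "oncology"),
    Lead("prospect-c", 0.72, 30, "EU", "oncology"),
    Lead("prospect-d", 0.40, 60, "EU", "cardiology"),
]

print([lead.prospect for lead in rank_leads(leads)])
# ['prospect-b', 'prospect-c', 'prospect-a', 'prospect-d']
print([lead.prospect for lead in rank_leads(leads, geography="EU", expertise="oncology")])
# ['prospect-c', 'prospect-a']
```

A lexicographic sort key keeps the ranking easy to explain to BDAs; a weighted score blending propensity and recency would be an equally plausible design if the business prefers a single number per lead.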